Deploy with Hugging Face Models

Hands-on tutorial for launching and deploying LLMs using Friendli Dedicated Endpoints with Hugging Face models.

Hands-on Tutorial

Deploying meta-llama-3-8b-instruct LLM from Hugging Face using Friendli Dedicated Endpoints

Introduction

With Friendli Dedicated Endpoints, you can easily spin up scalable, secure, and highly available inference deployments, without the need for extensive infrastructure expertise or significant capital expenditures. This tutorial is designed to guide you through the process of launching and deploying LLMs using Friendli Dedicated Endpoints. Through a series of step-by-step instructions and hands-on examples, you’ll learn how to:

- Select and deploy pre-trained LLMs from Hugging Face repositories

- Deploy and manage your models using the Friendli Engine

- Monitor and optimize your inference deployments

Prerequisites:

- A Friendli Suite account with access to Friendli Dedicated Endpoints

- A Hugging Face account with access to the meta-llama/Meta-Llama-3-8B-Instruct model

Step 1: Create a new endpoint

- Log in to your Friendli Suite account and navigate to the Friendli Dedicated Endpoints dashboard.

- If not done already, start the free trial for Dedicated Endpoints.

- Create a new project, then click on the ‘New Endpoint’ button.

- Fill in the basic information:

- Endpoint name: Choose a unique name for your endpoint (e.g., “My New Endpoint”).

- Select the model:

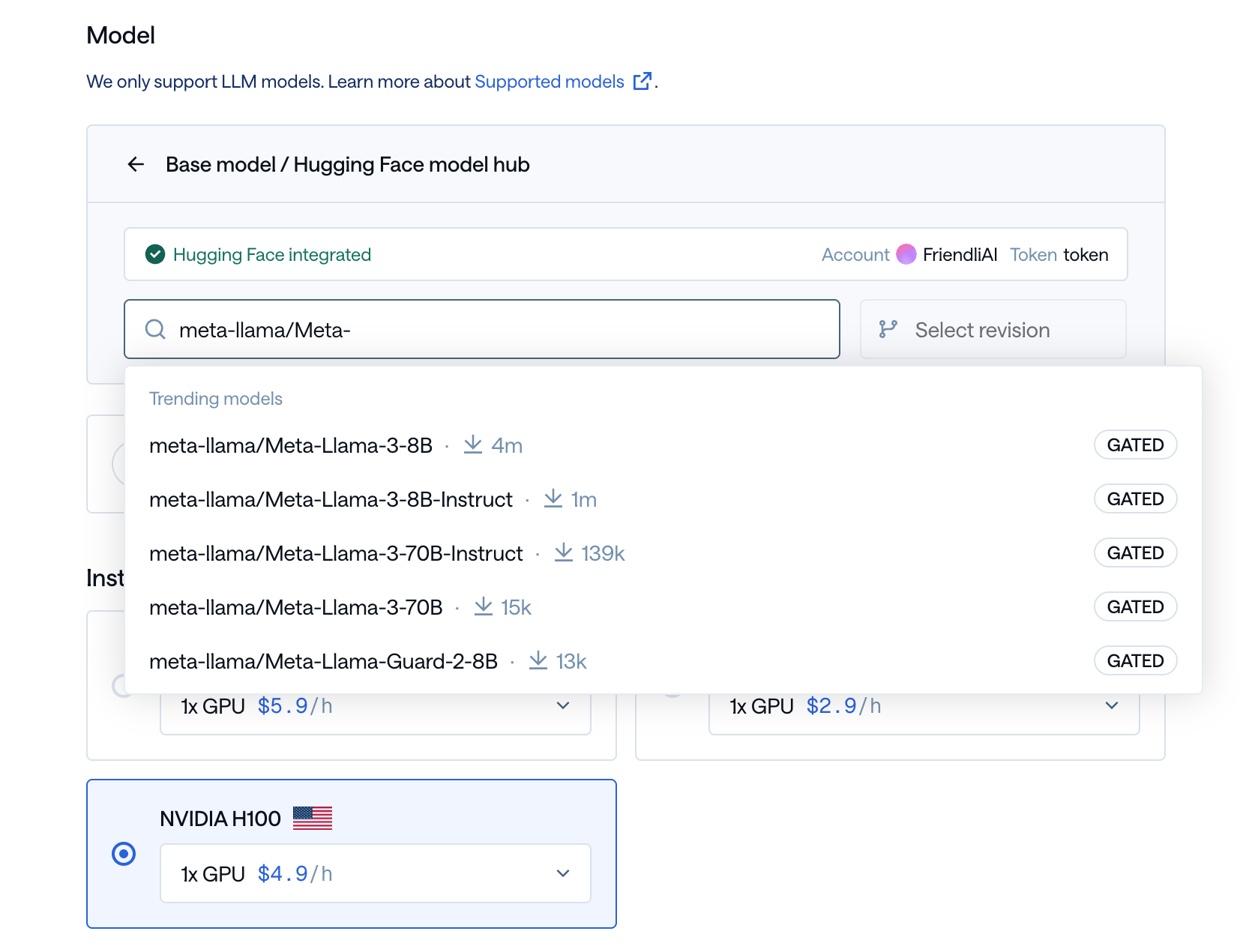

- Model Repository: Select “Hugging Face” as the model provider.

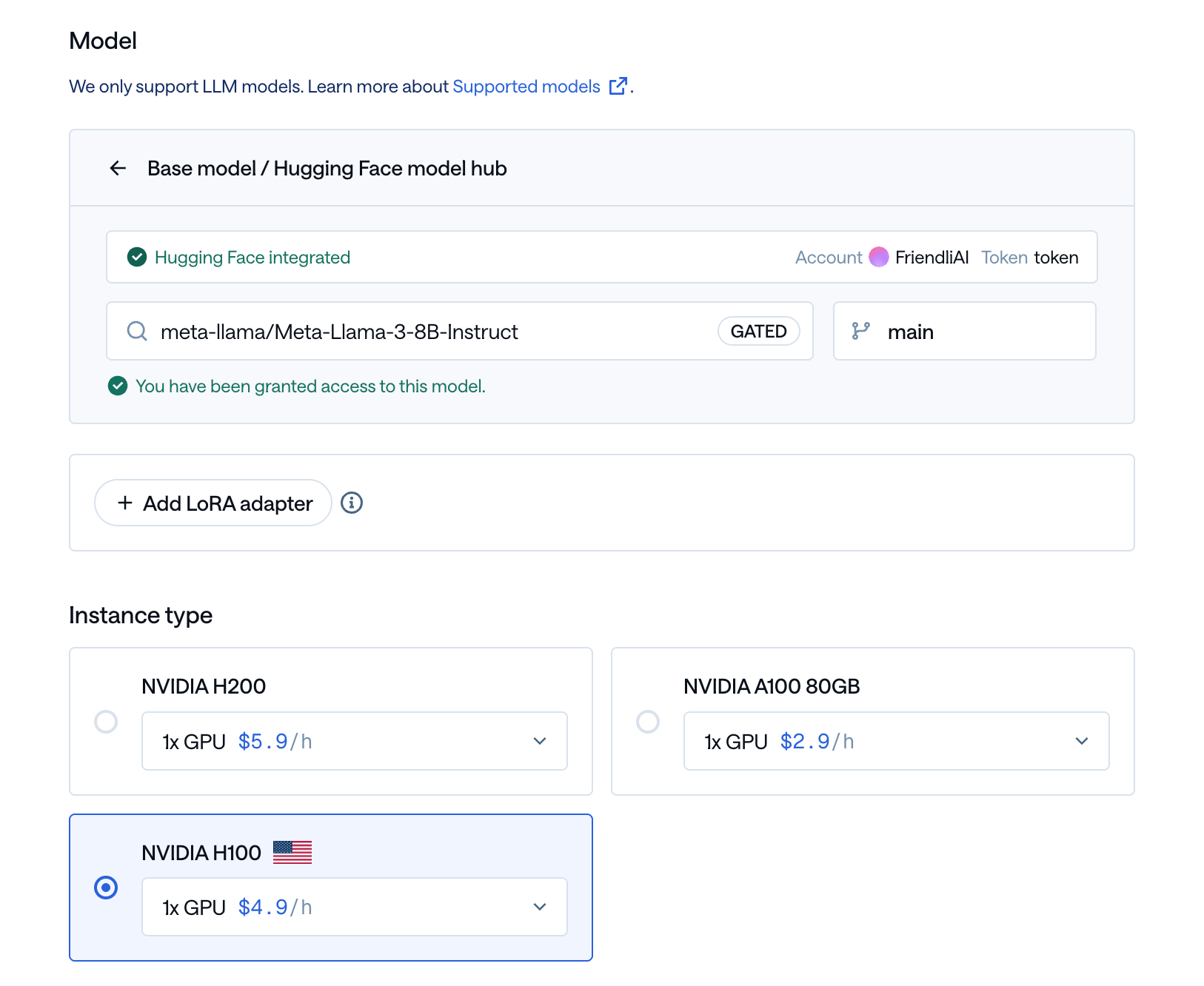

- Model ID: Enter “meta-llama/Meta-Llama-3-8B-Instruct” as the model ID. Once the search bar loads the results, click the top result that exactly matches the repository ID.

By default, the endpoint pulls the latest commit on the model’s default branch. You may manually select a specific branch, tag, or commit instead. If you’re using your own model, check Format Requirements for requirements.

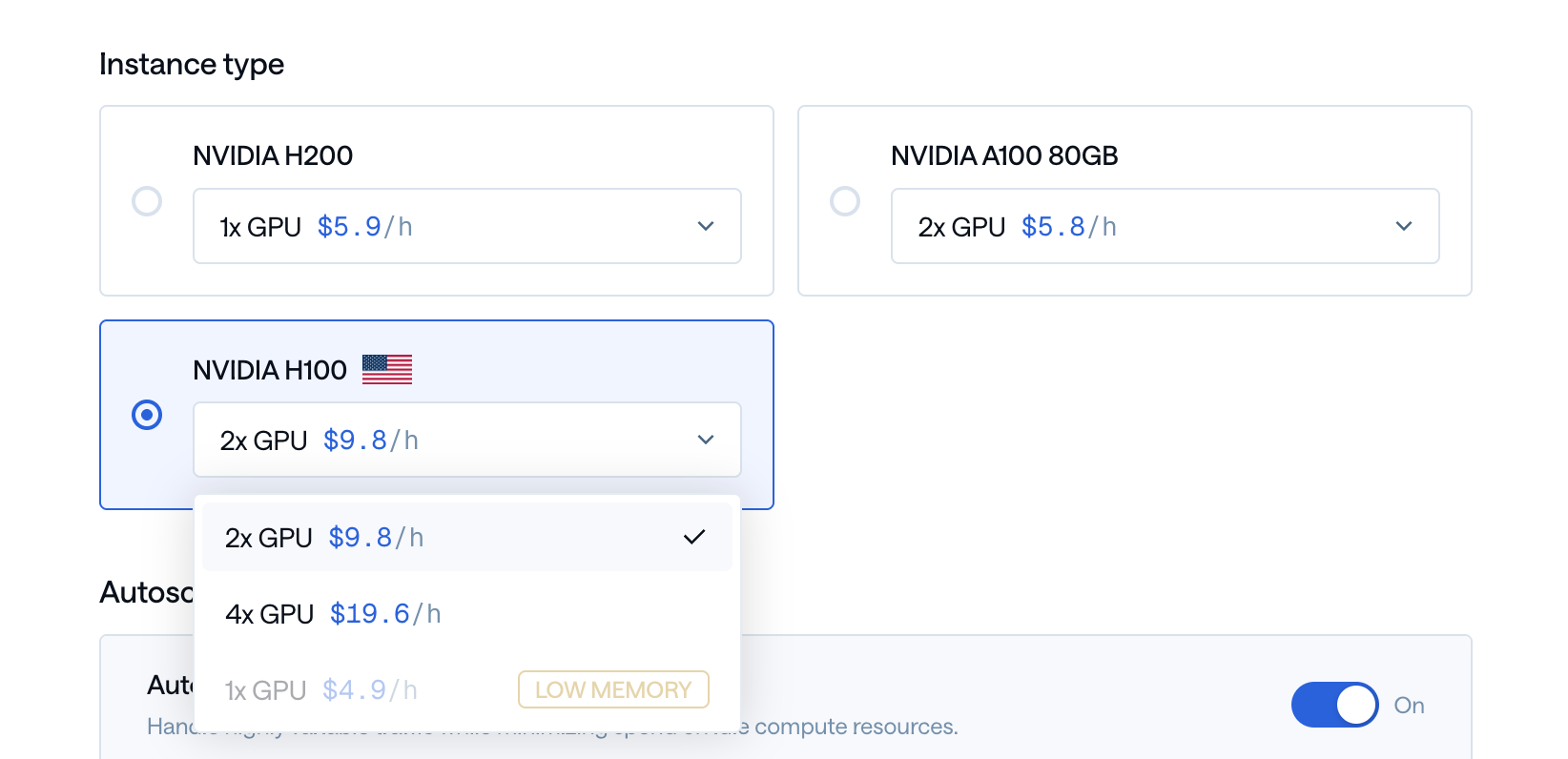

- Select the instance:

- Instance configuration: Choose a suitable instance type based on your performance requirements. We suggest 1x A100 80G for most models.

For large models, some instance options may be restricted because they do not have enough VRAM to run the model.

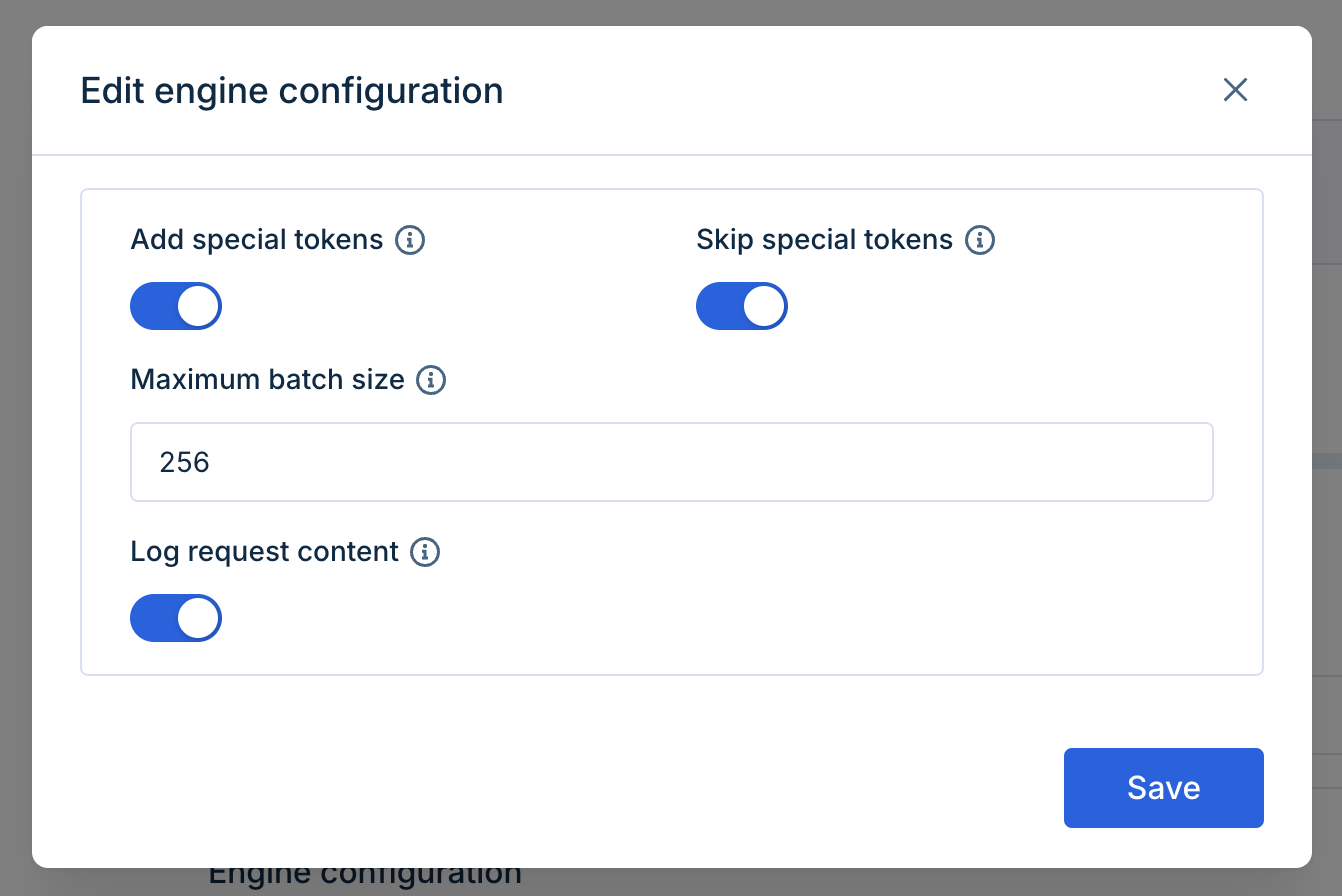

- Edit the configurations:

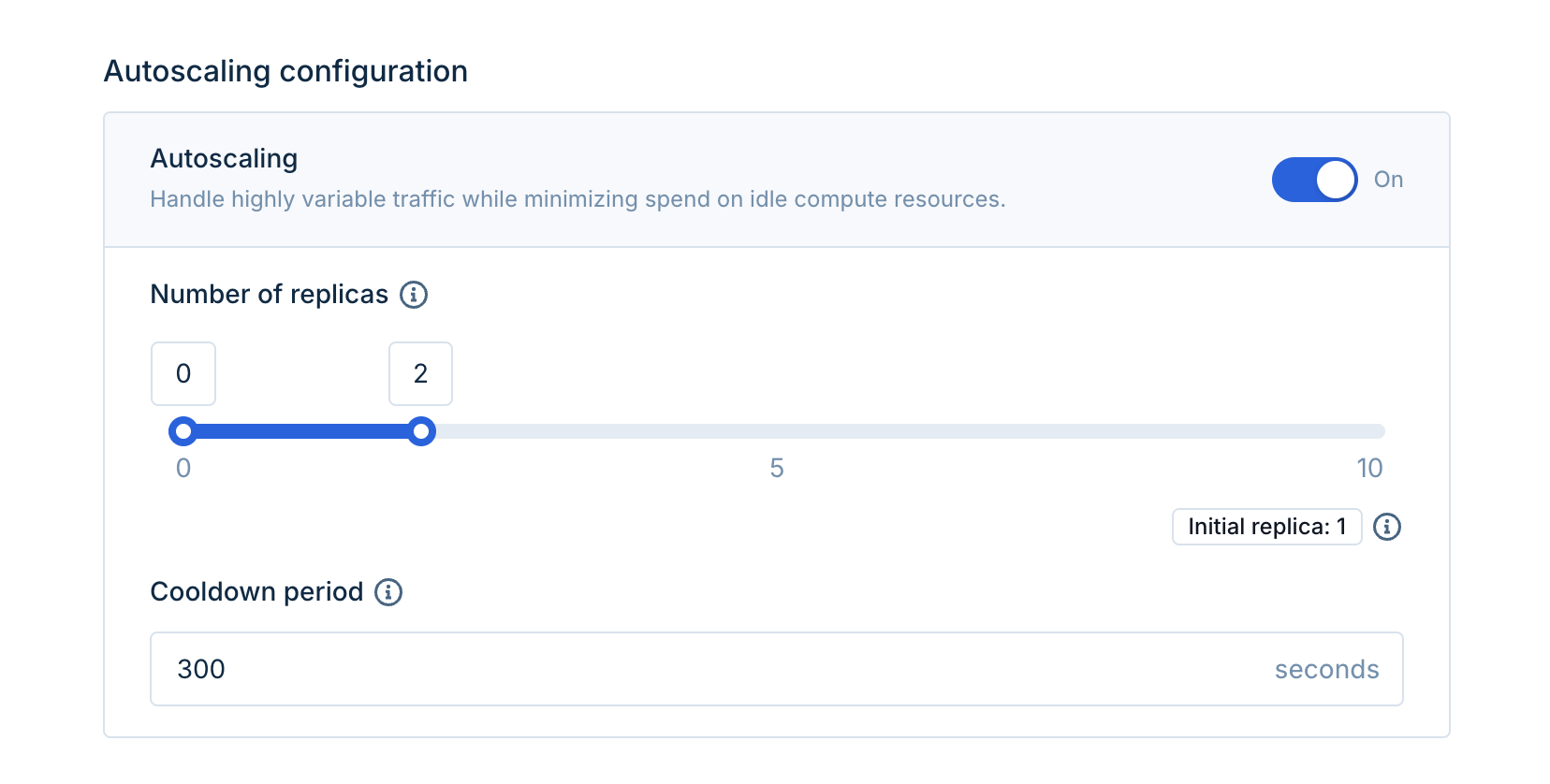

- Autoscaling: By default, the autoscaling ranges from 0 to 2 replicas. This means that the deployment will sleep when it’s not being used, which reduces cost.

- Advanced configuration: Some LLM options, including the batch size and token configurations, can be changed. For this tutorial, we’ll leave them as-is.

- Click ‘Create’ to create a new endpoint.
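The VRAM restriction mentioned in the instance selection step can be approximated with simple arithmetic. The sketch below is a rule of thumb, not the check that Friendli actually applies: it assumes 16-bit weights (2 bytes per parameter) and counts only the weights, so the KV cache and activations need additional headroom. The function names and the parameter counts are illustrative assumptions.

```python
def estimate_weight_memory_gb(num_parameters: float, bytes_per_parameter: int = 2) -> float:
    """Approximate memory needed for the model weights alone, in GB (10^9 bytes).

    Assumption: 2 bytes per parameter corresponds to fp16/bf16 weights.
    """
    return num_parameters * bytes_per_parameter / 1e9


def weights_fit(num_parameters: float, vram_gb: float, bytes_per_parameter: int = 2) -> bool:
    """Return True if the weights alone are smaller than the available VRAM.

    This is a necessary condition only: inference also needs memory for the
    KV cache and activations, which this sketch ignores.
    """
    return estimate_weight_memory_gb(num_parameters, bytes_per_parameter) < vram_gb


if __name__ == "__main__":
    # Roughly 8 billion parameters on a single 80 GB GPU.
    print(estimate_weight_memory_gb(8e9))
    print(weights_fit(8e9, vram_gb=80))
    # A hypothetical 70-billion-parameter model on the same GPU.
    print(weights_fit(70e9, vram_gb=80))
```

Under these assumptions, an 8B-parameter model needs about 16 GB for its weights, which leaves ample room on a 1x A100 80G instance, while a model in the 70B range would not fit on a single such GPU at 16-bit precision.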

Step 2: Test the endpoint

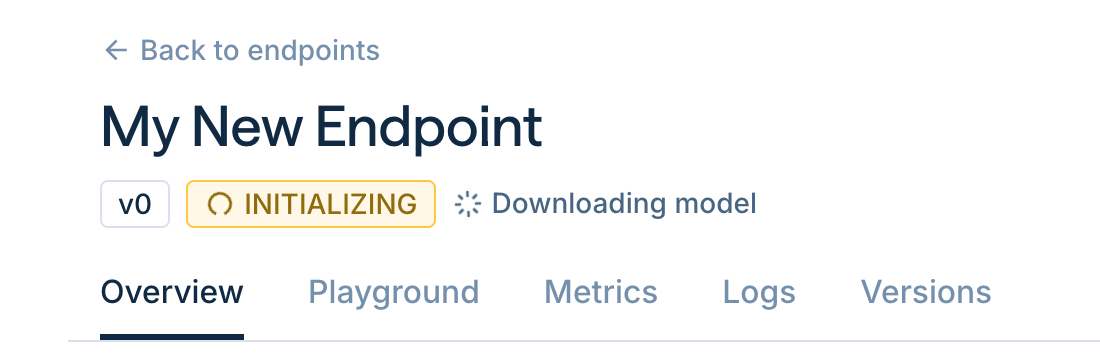

- Wait for the deployment to be created and initialized. This may take a few minutes.

You can check the status using the indicator under the endpoint’s name.

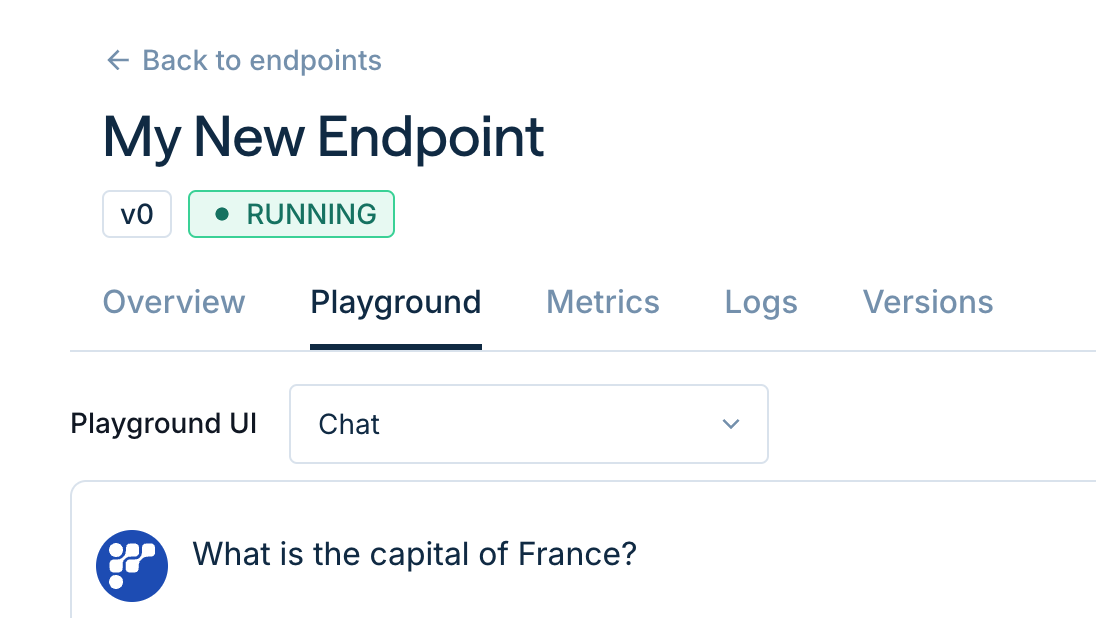

- In the “Playground” section, you may enter a sample input prompt (e.g., “What is the capital of France?”).

- Click on the right arrow button to send the inference request.

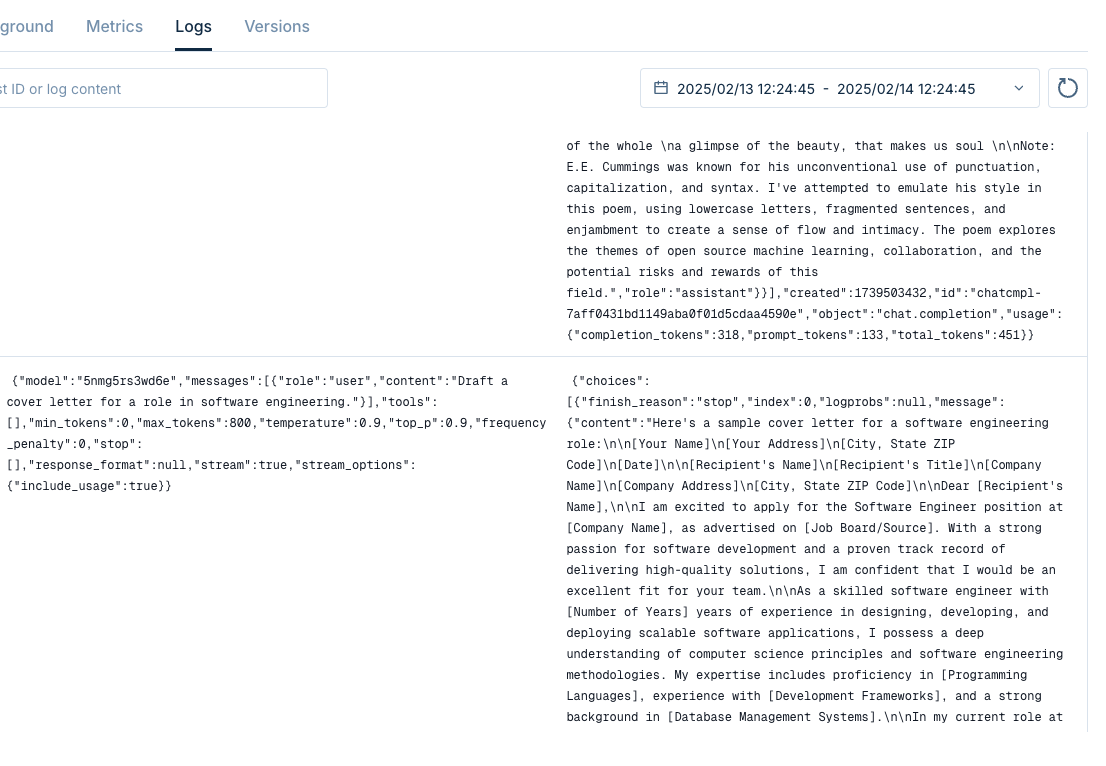

- If you are an enterprise user, you can use the “Metrics” and “Logs” sections to monitor the endpoint.

Step 3: Send requests by using cURL or Python

- As described in our API docs, you can send inference requests to the endpoint using cURL or Python.
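A minimal Python sketch of such a request, using only the standard library, might look like the following. It rests on several assumptions that should be checked against the API docs: that Dedicated Endpoints expose an OpenAI-compatible chat completions route at the URL shown, that the endpoint ID is passed in the `model` field, and that a Friendli token is sent as a bearer token. The helper names `build_chat_request` and `send_chat_request` are hypothetical.

```python
import json
import urllib.request

# Assumption: OpenAI-compatible chat completions route for Dedicated Endpoints.
# Confirm the exact URL in the Friendli API docs before use.
API_URL = "https://api.friendli.ai/dedicated/v1/chat/completions"


def build_chat_request(endpoint_id: str, token: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for one user prompt."""
    payload = {
        "model": endpoint_id,  # assumption: the endpoint ID is used as the model name
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request) -> dict:
    """Send the request and return the decoded JSON response body."""
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)
```

To try it, build a request with your own endpoint ID and token, for example `build_chat_request("YOUR_ENDPOINT_ID", token, "What is the capital of France?")`, and pass the result to `send_chat_request`. Keeping the request construction separate from the network call makes it easy to inspect the payload before anything is sent.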

Step 4: Update the endpoint

- You can change the model and most other endpoint settings by clicking the update button.